Get our insight into best practice and recommended techniques for collecting and using learner feedback.

The Ultimate Guide to Training Evaluation

Unlock the power of your learner feedback

How AI is transforming training feedback analysis

Turning unstructured training feedback into meaningful, data-driven insights

How to use customer reviews to market your training courses

A best practice guide to making the most of your reviews

7 common mistakes in training evaluation (and how to avoid them)

Member Benefits

Exclusive discounts and benefits from our partner organisations and customers

How to get CPD accredited with the CPD Standards Office

20% discount on all CPD accreditation packages for Coursecheck customers

Flexible eLearning platform from Maguire Training

15% discount on eLearning packages for Coursecheck customers

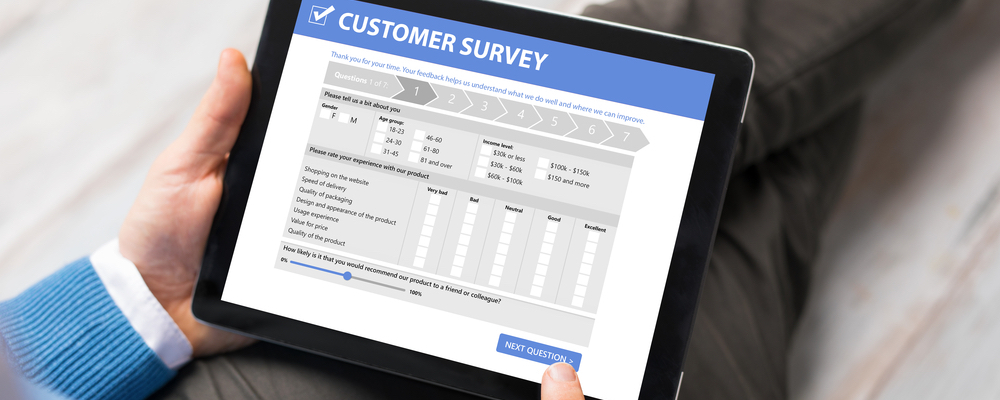

Designing effective feedback forms to give you insights you can act on

A best practice guide for Learning & Development teams

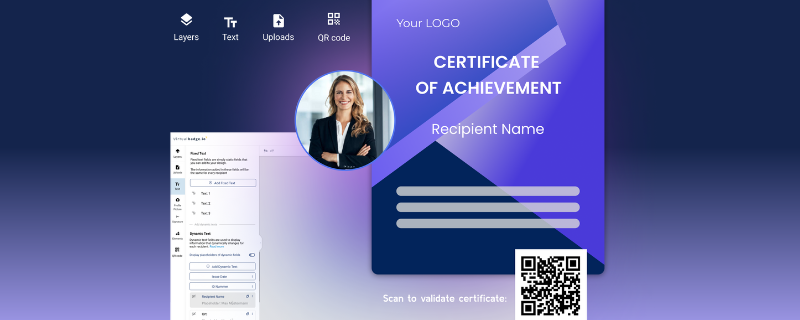

Streamline your certificate management with Virtualbadge

20% off Virtualbadge subscriptions for Coursecheck customers

How to ask for training feedback (and boost your response rates)

If you’re struggling to collect training feedback that gives you actionable insights, follow these best practice tips

CLO100: The Home of Learning Leaders

Exclusive discounts for Coursecheck customers for the Learning Leaders Programmes and CLO Community Membership

Coursecheck & Arlo: Integrate training evaluation with course management

Learn how to integrate training evaluation with course management

Will Thalheimer’s Learning Evaluation Workshops

Up to 30% discount for Coursecheck customers

Moving your classroom courses online?

Our six best practice suggestions for making a successful switch

Apprenticeship Training Providers

Capture and analyse learner voice using a purpose-built feedback tool

Analysing feedback on Coursecheck

See the big picture without losing sight of the details that matter

Notes for instructors using Coursecheck

If you're an instructor collecting feedback at the end of a course, here's what you need to know

What's a good Net Promoter Score in the Training Industry?

How Does Your Net Promoter Score Compare to Your Training Industry Counterparts?

Installing the Coursecheck widget

How to integrate your reviews with your website using the Coursecheck widget

How to make your feedback matter

Collecting feedback is the easy bit. Using it to make a difference is harder

How to get high quality customer feedback

Quality or quantity? Which would you rather have in your course feedback?

What makes a good feedback form?

Start with the end in mind and define the business questions you want to answer

Getting started with Coursecheck

You've signed up for a free trial. What happens next?

Why should training businesses go digital?

Five reasons to ditch paper feedback forms and go digital

Responding to customer feedback

Six tips for responding to customer feedback (good and bad)

Choosing the right reviews platform

Six things to consider when choosing a reviews website to promote your training

How important are reviews in the world of B2B training?

Online reviews are no longer just a B2C thing. They matter for B2B businesses too